The Time Is Now for a Stronger Private/Public Collaboration with AI

Could AI usher in a new era of cooperation between the public and private sectors? I’ve been thinking about this question a lot as I watch AI business leaders call upon legislators to play a more active role in regulating AI. For example, in his first appearance before the U.S. Congress, Sam Altman, CEO of OpenAI, implored lawmakers to regulate AI.

“I think if this technology goes wrong, it can go quite wrong. And we want to be vocal about that,” he told the Senate Judiciary Subcommittee on Privacy, Technology, and the Law. “We want to work with the government to prevent that from happening.”

He is not the only one calling for regulation. Alphabet CEO Sundar Pichai wrote in The Financial Times that AI needs to be regulated in a way that balances innovation and potential harms. “I still believe AI is too important not to regulate, and too important not to regulate well,” he wrote.

Pichai recommended that governments, specialists, scholars, and the general public actively participate in the dialogue when formulating policies to guarantee the security of AI technologies. Additionally, he emphasized the importance of nations collaborating to establish stringent regulations.

It’s fascinating to see business leaders openly call upon the public sector to regulate what the private sector created. Contrast this stance with consumer privacy, where the private and public realms are often at odds. So, why do we see such a stark difference? I believe the private sector is coming to grips with the reality that it has unleashed something it does not fully understand. This is coming to light especially as large language models continue to surprise and confound AI experts by behaving in unpredictable ways.

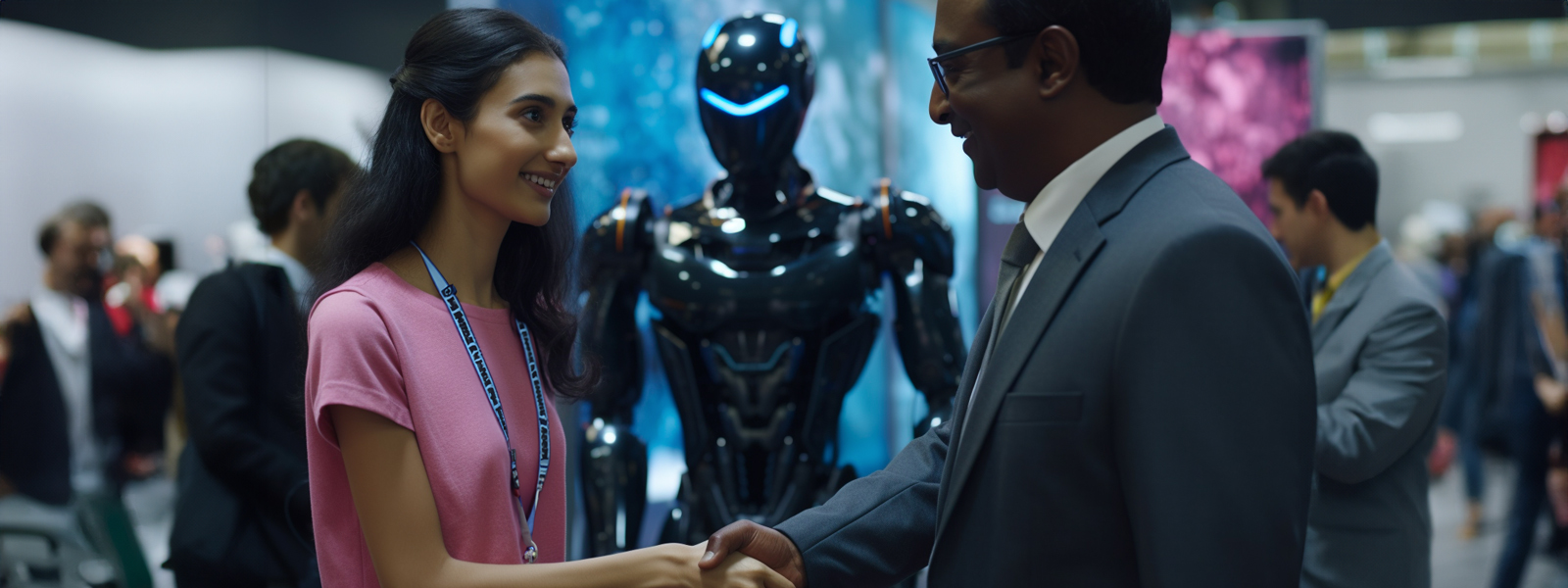

But the relationship between the public and private sectors can become far more interesting and productive when the conversation about AI extends beyond regulation. Yes, regulation is essential at this point, and governments around the world are already taking steps. But by cooperating, businesses and governments can also harness the power of AI in some incredibly imaginative and powerful ways for the betterment of society. Consider, for example, some collaboration that has been happening in recent years:

- Google's DeepMind worked with the UK's National Health Service (NHS) on AI-driven health research, including developing algorithms to detect eye diseases from medical images. But this is certainly not the only time Google has worked with the public sector for the public good.

- Microsoft partnered with several universities and government organizations worldwide to implement FarmBeats, a project using AI and IoT to enable data-driven farming.

We are just in the early days of collaboration, but the potential for AI to improve society is exciting. Recently the Biden Administration announced a $140 million investment in seven new AI research institutes as part of a stronger collaboration between the government, private sector, and academia to encourage the uptake of AI. This is a baby step ($140 million is a modest sum considering that in FY2023 the Department of Defense had $2.04 trillion distributed among its various components). But it’s a necessary step.

For a stronger relationship to take hold, a number of things need to happen, including:

- Humility. Business leaders need to be more open about admitting what they don’t know about AI.

- Knowledge. Legislators need to learn more about AI, a challenge that has attracted some criticism.

- Action. As noted, the relationship needs to move beyond regulation (while including it) to include commitments to improve the world. These commitments need to be shared publicly to hold everyone accountable.

At Centific, we are collaborating with the private sector to apply AI more responsibly while ensuring that AI delivers business value. I for one am encouraged about the ways that businesses can evolve AI safely and productively as the private and public sectors cooperate for good.